Adam Marblestone at Asterisk:

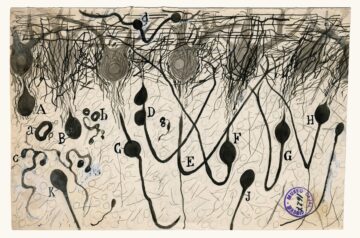

In the early years of modern deep learning, the brain was a North Star. Ideas like hippocampal replay — the brain’s way of rehearsing past experience — offered templates for how an agent might learn from memories. Meanwhile, work on temporal-difference learning showed that some dopamine neuron responses in the brain closely parallel reward-prediction errors — solidifying a useful framework for reinforcement learning.

DeepMind’s 2013 Atari-playing breakthrough was perhaps the high-water mark of brain-inspired optimism. The system was in part a digital echo of hippocampal replay and dopamine-based learning. DeepMind’s CEO gave talks in the early days with titles like “A systems neuroscience approach to building AGI.”

By around 2020, though, many in AI had accepted what Rich Sutton in 2019 called the “bitter lesson”: Simple general-purpose methods powered by massive compute and data outperformed hand-crafted details, whether brain-inspired or otherwise. “Scaling laws” for transformer-based language modeling — an architecture that owes little to the brain — showed a path to vastly improved performance. And, of course, it didn’t help that our knowledge of neuroscience was, and is still, primitive.

But I believe the brain may have something more to teach us about AI — and that, in the process, AI may have quite a bit to teach us about the brain.

More here.