Anthony Aguirre at Control Inversion:

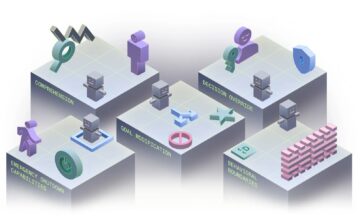

This paper argues that humanity is on track to develop superintelligent AI systems that would be fundamentally uncontrollable by humans. We define “meaningful human control” as requiring five properties: comprehensibility, goal modification, behavioral boundaries, decision override, and emergency shutdown capabilities. We then demonstrate through three complementary arguments why this level of control over superintelligence is essentially unattainable.

First, control is inherently adversarial, placing humans in conflict with an entity that would be faster, more strategic, and more capable than ourselves — a losing proposition regardless of initial constraints. Second, even if perfect alignment could somehow be achieved, the incommensurability in speed, complexity, and depth of thought between humans and superintelligence renders control either impossible or meaningless. Third, the socio-technical context in which AI is being developed — characterized by competitive races, economic pressures toward delegation, and potential for autonomous proliferation — systematically undermines the implementation of robust control measures.

More here.

Enjoying the content on 3QD? Help keep us going by donating now.