Scott Alexander at Astral Codex Ten:

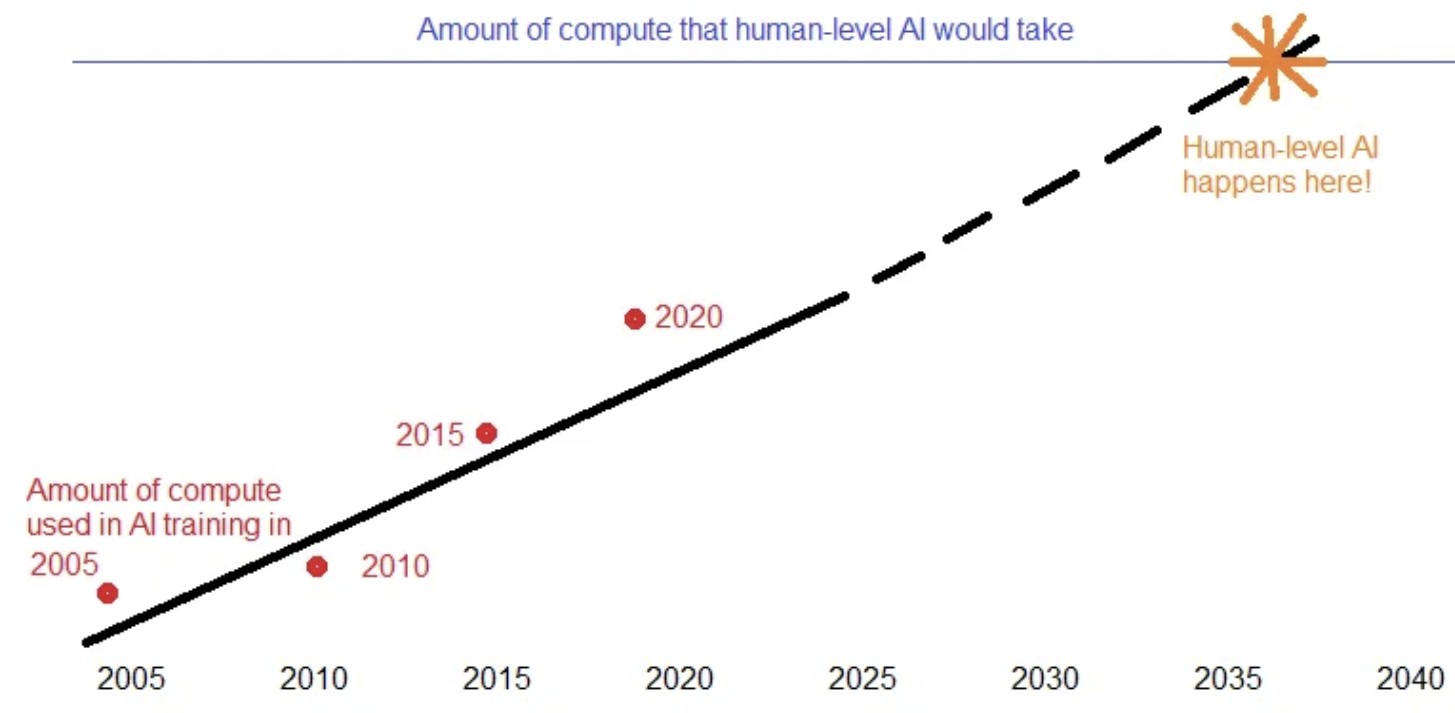

Last year I wrote about Open Philanthropy’s Biological Anchors, a math-heavy model of when AI might arrive. It calculated how fast the amount of compute available for AI training runs was increasing, how much compute a human-level AI might take, and estimated when we might get human-level AI (originally ~2050; an update says ~2040).

Compute-Centric Framework (from here on CCF) updates Bio Anchors to include feedback loops: what happens when AIs start helping with AI research?

In some sense, AIs already help with this. Probably some people at OpenAI use Codex or other programmer-assisting-AIs to help write their software. That means they finish their software a little faster, which makes the OpenAI product cycle a little faster. Let’s say Codex “does 1% of the work” in creating a new AI.

Maybe some more advanced AI could do 2%, 5%, or 50%. And by definition, an AGI – one that can do anything humans do – could do 100%. AI works a lot faster than humans. And you can spin up millions of instances much cheaper than you can train millions of employees. What happens when this feedback loop starts kicking in?
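
To get a feel for why the loop matters, here is a toy sketch in Python. It is not the CCF model. It assumes, purely for illustration, that if AI does a fraction `f` of the research work at negligible time cost, research runs `1 / (1 - f)` times faster, and that `f` rises linearly with accumulated progress. The function name and every parameter (`base_rate`, `start_fraction`, `gain`, `cap`) are made up for this example.

```python
def simulate(years, base_rate=1.0, start_fraction=0.01, gain=0.05, cap=0.99):
    """Toy feedback loop between AI progress and AI-assisted research.

    Assumptions (illustrative only, not taken from CCF):
      - humans alone produce `base_rate` units of progress per year;
      - if AI does a fraction f of the work, the speedup is 1 / (1 - f);
      - f grows linearly with accumulated progress, capped below 1.
    Returns a list of (year, fraction, speedup, progress) tuples.
    """
    progress = 0.0
    fraction = start_fraction
    history = []
    for year in range(years):
        # Speedup from automating `fraction` of the research work.
        speedup = 1.0 / (1.0 - fraction)
        progress += base_rate * speedup
        history.append((year, fraction, speedup, progress))
        # Feedback: more progress means AI can take on more of the work.
        fraction = min(cap, start_fraction + gain * progress)
    return history


if __name__ == "__main__":
    for year, fraction, speedup, progress in simulate(20):
        print(f"year {year:2d}: AI share {fraction:.2f}, "
              f"speedup {speedup:6.2f}x, progress {progress:8.2f}")
```

Setting `gain=0.0` switches the loop off: the AI share stays at 1% and progress is linear. With any positive `gain`, each year's progress raises the next year's speedup, so the curve bends upward until the share hits `cap`. The particular numbers mean nothing. The point is only the shape.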

More here.