by Tim Sommers

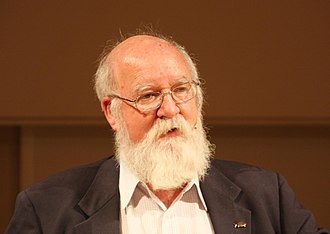

Widely-respected philosopher, Tufts professor, one of the “Four Horsemen of the Apocalypse” of the New Atheists’ movement, and an “External Professor” for the prestigious Santa Fe Institute, Daniel Dennett recently took to the very public soap box of The Atlantic to issue a dire warning.

“Today, for the first time in history, thanks to artificial intelligence, it is possible for anybody to make counterfeit people who can pass for real in many of the new digital environments we have created. These counterfeit people are the most dangerous artifacts in human history, capable of destroying not just economies but human freedom itself. Before it’s too late (it may well be too late already) we must outlaw both the creation of counterfeit people and the ‘passing along’ of counterfeit people. The penalties for either offense should be extremely severe, given that civilization itself is at risk.”

“The most dangerous artifacts in human history?” What about gunpowder, the printing press, chemical weapons, fission bombs, fusion bombs, biological weapons, machines that burn massive amounts of fossil fuels and dump carbon into the atmosphere, Adam Sandler movies, social media in general, and TikTok in particular?

Before I say anything else I should say that Dennett is not only a brilliant philosopher, but also a charming, affable, and generous person. At public events he seems to make a special effort to seek out and spend time with students. I could say more, but you get the idea.

Having said that, what is up with AI making very smart people lose their minds? (See also Maciej Ceglowski’s aptly titled “Superintelligence: The Idea That Eats Smart People.” (Slides here.)) I think Dennett is very, and weirdly, wrong here. Weirdly, I’ll save for last, but basically this “counterfeit” people thing seems to be in tension with his own views. What he is very wrong about, however, I believe, is the nature of the threat. The harms he lays out are not specific to AI and, in fact, many have already happened. Further, it’s not clear that AI is an apocalyptic force multiplier on this front. Or so I’ll argue.

Why does “Creating counterfeit digital people risk…destroying our civilization”? I am happy that Dennett has not fallen for the “AIs will either take over or destroy the world” line, but “destroying…human freedom itself” might be worse. So, what’s the problem? Dennett says that “By allowing the most economically and politically powerful people, corporations, and governments to control our attention, these systems will control us. Counterfeit people, by distracting and confusing us and by exploiting our most irresistible fears and anxieties, will lead us into temptation and, from there, into acquiescing to our own subjugation.” But, wait, what does any of that have to do with AIs or “counterfeit people?” Hasn’t this already happened?

Let’s pull two issues apart.

First, in terms of controlling our attention, hasn’t that already occurred without AIs? This is a real, very serious problem, and AIs may make it worse, but it doesn’t really hinge on AIs. If this is the problem with AIs, doesn’t that mean that without the internet in general, and social media specifically, this “controlling of our attention” wouldn’t be possible? Why pick on AIs? Blame Face-Tok-agram.

Second, drop “attention” and read Dennett again. With AIs “the most economically and politically powerful people, corporations, and governments…will control us.” What do you mean, “will”? As a political philosopher, I have to ask, who exactly do you think has all the power right now? What new power will AI give them? (See the previous paragraph again, on controlling attention.)

To be clear, I have no idea how bad the AI misinformation/persuasion situation might get. I just think this apocalyptic thinking is radically underjustified at this point – and being endlessly repeated at us as what all the smart people already know. Meaning many of the smartest people on the planet are laser-focused, not on a war where one side has nukes, the rise of fascism and destabilization of American democracy, the planet’s ongoing and potentially apocalyptic climate changes, or persistent and devastating economic inequality across the globe, but on whether the machines they are building for profit might be dangerous. ‘Please, stop us before we destroy the world!’ they shout while banking billions. (Obviously, this last bit is not Dennett’s fault.)

Dennett says “Another pandemic is coming, this time attacking the fragile control systems in our brains—namely, our capacity to reason with one another…” That seems an overly dramatic way of saying that there will be tons of AI grifting soon. Overly dramatic, especially, since there’s already plenty of non-AI (and probably some AI) grifting going on right now. (Maybe he’s taking the “superintelligence” thing seriously and saying AIs will not just distract and persuade, but will be orders of magnitude more persuasive than ordinary people. I see little evidence for this view.)

But here’s the weird bit about Dennett taking this position. Dennett has this really interesting view of the mind based on the idea that there are “three stances” we can take in understanding something and predicting what it will do. We could use the “physical stance” to try to predict the behavior of, for example, a ball. Basically via geometry or the rules of physics or more informal observed-bouncing-heuristics, we can calculate where the ball will end up after we bounce it.

The design stance tries to predict the behavior of, say a watch or an app on a smartphone, asking what it was made for and, therefore, how it might have been constructed, or coded, to achieve what it was designed to do, and so what it will do next.

Finally, the intentional stance treats the subject as having beliefs, desires, intentions, and a certain degree of rationality. Obviously, we take the intentional stance towards other people. But Dennett says we can also profitably take the intentional stance with a computer chess program or an AI.

Here’s the twist. According to Dennett there is nothing to being intentional beyond it being predictive for others to take an intentional stance towards you/it. One of his earlier books was titled “Consciousness Explained,” but it is often referred to as “Consciousness Explained Away” by people who don’t find this approach convincing.

This view has a surprising amount of overlap with Heidegger’s view in Being and Time, by the way. Heidegger says we are aware at first of ourselves as conscious beings, then we become aware of objects that we use as tools, and only when objects break, or fail to function the way we want them to as tools, do we “see” the larger physical world that frames “equipment” or the “ready-to-hand” (his words for tools) and Dasein (us). I don’t know how much of this even makes sense. Heidegger is legendarily obscure (and was a Nazi). But I think the fact that he moves in the opposite direction as Dennett is interesting. Starting from something like consciousness maybe it makes more sense, when confronted with an AI, to ask, first, is it really conscious like us?

But Dennett deals away the most straightforward version of this possibility. Since there’s nothing to the question of whether the AI is intentional or not except whether we can predict its behavior by taking an intentional stance towards it, why think AIs that are intentional enough are only “counterfeit” people? He argues that they are problematic because they “fool” us into taking them for intentional beings. But aren’t they? Or, at least, wouldn’t they be, if they could consistently fool us in this way? Dennett seems to have basically a Turing Test for intentionality, but then still wants somehow to say that some beings can pass the test while also remaining fake. Shouldn’t they have failed if they are fake? Or if not, shouldn’t we admit the test doesn’t work?

I can see a couple of ways to object. Maybe, Dennett just means by counterfeit that they don’t really have a body or sensory input or something. But that doesn’t seem relevant if they can present themselves as thoroughly intentional. Maybe, he just means they appear intentional, but they are not – or they are not as intentional as a human being. There’s nothing contradictory in that. But that position is in tension with his current view.

What I mean is this. Dennett writes that “Our natural inclination to treat anything that seems to talk sensibly with us as a person—adopting what I have called the ‘intentional stance’ —turns out to be easy to invoke and almost impossible to resist…” This is the first time I have ever heard him even hint that it should be resisted, even though in this context it clearly works the best. Imagine predicting the behavior of even a simple AI from the design or physical stances alone. I don’t know how he can take this position except by saying that there is something more to intentionality than he has previously allowed.

One well-known philosopher, David Wallace, who almost certainly knows more about Dennett (and philosophy) than I do (so keep that in mind), rejected this position in the comment thread on a well-known philosophy blog. I won’t quote or paraphrase him without permission (see the thread for yourself), but I’ll just say what I got out of it was two ways to distinguish counterfeit persons from regular old ones. One way is that they are weakly intentional when compared to us. We are strongly intentional. The other is that they could be intentional, but not conscious (among other things that a person might be).

On the one side, to the extent that these systems don’t need to be sophisticated enough to be any more than “weakly intentional” it’s hard, as I say above, to think there’s a new threat. AIs are already plenty sophisticated enough to fool most people in many circumstances. If there’s an apocalyptic threat from this, it’s already here. But to me it still feels like Monday.

On the other side, if we are waiting for the true harbingers of doom in the form of strongly intentional systems, I don’t think we can call those counterfeit when they arrive. If they are intentional enough, then they are as intentional as you can get – since being intentional is just being the kind of being that is predictable from the intentional stance. And, as for consciousness, I don’t know what Dennett thinks, but I can’t see how a being can be strongly intentional and not be conscious. In fact, I thought the point of the view was to give a deflationary account of consciousness. (Wallace says that’s wrong.)

To finish where we started, recall Dennett says that “Today, for the first time in history, thanks to artificial intelligence, it is possible for anybody to make counterfeit people who can pass for real…” Is that right? Anybody? According to some reports, ChatGPT costs $700,000 a day to maintain. If your purpose is to spread misinformation and fool people online, you can hire an awful lot of help with that much money. And rich people’s ability to hire a lot of help, so far, has always been a bigger problem than any kind of technology.