by Chris Horner

The illusion of rational autonomy

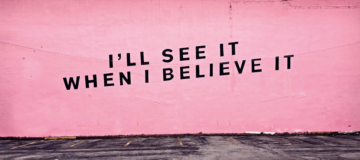

The world is full of people who think that they think for themselves. Free thinkers and sceptics, they imagine themselves as emancipated from imprisoning beliefs. Yet most of what they, and you, know comes not from direct experience or through figuring it out for oneself, but from unknown others. Take science, for instance. What do you think you actually know? That the moon affects the tides? Something about the space-time continuum or the exciting stuff about quantum mechanics? Or maybe the research on viruses and vaccines? Chances are whatever you know you have taken on trust – even, or particularly, if you are a reader of popular science books. This also applies to most scientists, since they usually only know what is going on in their own field of research. The range of things we call ‘science’ is simply too vast for anyone to have knowledge in any other way.

We are confronted by a series of fields of research, experimentation and application: complex and specialised fields that require years of study and training to fully understand. As individuals, we cannot be experts in all scientific domains, which is why we typically rely on the scientific community: experts from various fields who have the background knowledge and experience needed to evaluate scientific theories and data accurately.

Scientists’ findings are published in peer-reviewed journals, so their work has been critically evaluated by other experts in their field. This process is supposed to ensure that their studies are methodologically sound, that their results are valid and reliable, and that their conclusions are supported by the evidence. It doesn’t always work out like that, though, what with the way fallible people get things wrong, cover up or massage data, etc., and the way institutions and individuals tend to agree with whoever is funding them. And scientists and their popularisers can get caught up in fashions: Chaos Theory, Evolutionary Psychology etc. The ‘natural sciences’, though, are the most successful way of predicting and controlling the natural world we have so far contrived. We, as non-experts, rely on scientists themselves to do the evaluating. We trust in the scientific community’s collective knowledge and expertise to determine which theories are most probable and which should be discarded based on the available evidence. But it would be good for the layperson to know more about scientific method. Knowing, for instance, what a theory is, or how important ‘blind’ and randomised trials are in research, might defend us from media claims about ‘what researchers have discovered’, ‘miracle’ cures and so on. An additional benefit would be knowing what the limits of science are, thus avoiding ‘scientism’: the belief that the investigative methods of the physical sciences are appropriate in all fields of inquiry.

The 90% Rule

There is no such thing as rational autonomy here. Yet scientists do change their minds. Theories get discarded as inadequate or are disproved. So the question arises of whom to rely on. What about controversial or debatable claims? Some things no longer rate as the knowledge we once thought we had (e.g., the existence of phlogiston) or remain to be settled, like string theory or ‘many worlds’ in physics. Such developments are, of course, part and parcel of the successful progress of science. But the scope for the layman to make assessments is rather small. I would suggest the following rough and ready criterion: if about 90% of scientists in the relevant scientific area come to agree on something, and keep on agreeing about it, I’m prepared to call it a fact, or true. So by that test, human-caused climate warming is a scientific fact. Only a tiny fringe disputes it. Let’s not deny the importance of tiny fringes in keeping everyone on their toes, but let’s not overrate their importance either: until and unless they can come forward with enough evidence to move the vast majority of scientists on this issue, I’m sticking with the 90%.

And what applies to the physical sciences applies to the ‘human sciences’ too, to history, psychology and so on. Not everything is settled, but a surprising amount of it is agreed on. One relies, again, on the work of qualified others. Reason is a collective enterprise, and we outsource most of it. The best most of us can do is to understand the basics of the relevant methods used in the respective disciplines. What is appropriate for history won’t necessarily apply to biology. But what does apply across all fields, and outside them too, is the importance of critical thinking. This is a vital practice for all citizens, whatever their expertise or interests, and should include an awareness of the importance of independent corroboration, of confirmation bias, and of classic errors in thinking like ‘post hoc, ergo propter hoc’ and more. Reliance on the scientific community, provided it is informed by a habit of critical reflection, is a better option than being a ‘free thinker’ who does her own research on YouTube. And it is the free thinker who is most often prone, I think, to that odd combination of gullibility and scepticism that leads to fantasies about UFOs, the ‘New World Order,’ or claptrap about QAnon.

God, etc.

What about religion? There too, very little generally has to do with the investigations of the individual thinker. While we should assess the claims made by religions and their spokespeople, belief and unbelief both have a lot to do with social norms. Most people in England 400 years ago professed belief in the Christian God: now fewer than half do, and far fewer go to any church. In contrast, the majority of US citizens profess belief in God and an afterlife. This isn’t due to the rise of philosophical reasoning in Kent rather than Kentucky. It is secularisation that has advanced further in the UK, not reason. The corrosive effect of capitalism and consumer culture has more to do with it than any number of tracts by atheists. Nor does the popularity of Christianity rather than Hinduism in the USA have much to do with the careful examination of the pros and cons of the respective faiths. Where you were born and what your parents believe are rather more important. In some cases rational argument and careful thought will play a role, but on the whole, they don’t. And in any case one can overrate the importance of beliefs in what is a whole way of life, with its rituals and observances, rather than a philosophical position anyone has consciously adopted.

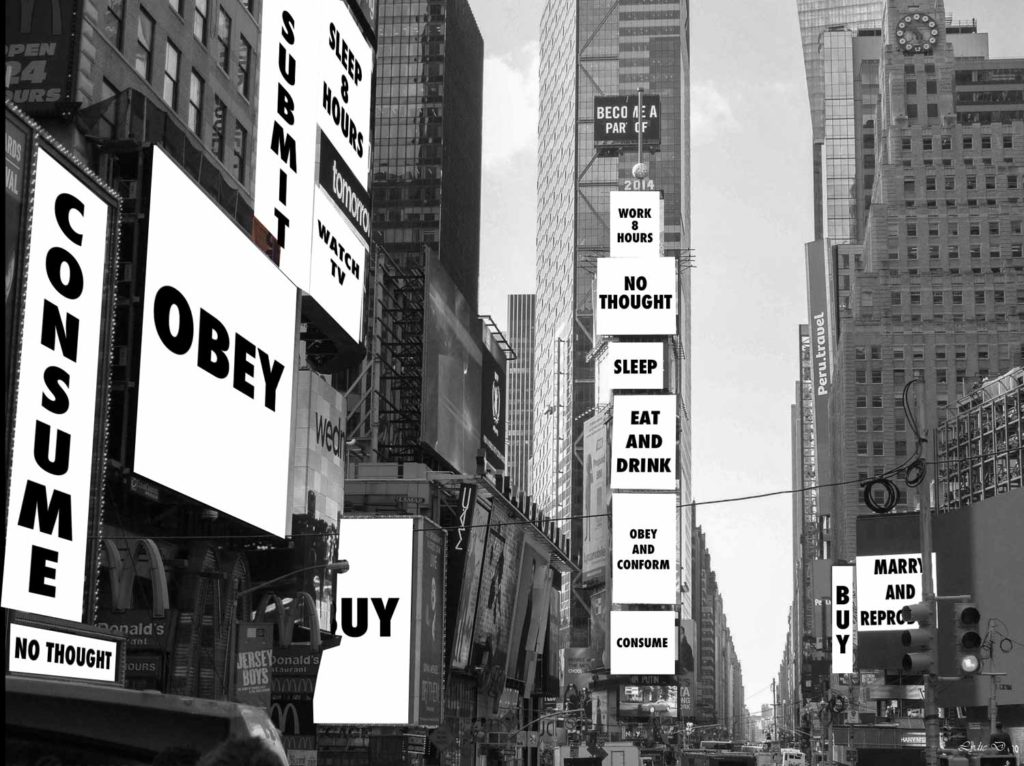

Ideology as a Form of Life

Further, the widespread acceptance of ‘Capitalist Realism’, the notion that there can be no alternative to our current economic system, isn’t based on a careful discussion of the merits of Adam Smith versus Karl Marx, or Hayek versus Polanyi, but on what most others seem to be doing. Ideology itself relies less on beliefs and opinions than on the routines that are regularly performed. Commodity fetishism, too, is a form of life. We may express cynical distrust or doubt in ‘The Market’ but our actions keep the show on the road. Presumably this will go on unless and until something jolts us out of our daily round: like the ATMs ceasing to pay out cash, for instance. Perhaps that day will come. But until it does, we will go on as we always have. Events radicalise people more than seminars ever do. To paraphrase Jonathan Swift, you can’t reason a person out of something they weren’t reasoned into in the first place.