Steven Johnson in the New York Times:

Inside one of the buildings lies a wonder of modern technology: 285,000 CPU cores yoked together into one giant supercomputer, powered by solar arrays and cooled by industrial fans. The machines never sleep: Every second of every day, they churn through innumerable calculations, using state-of-the-art techniques in machine intelligence that go by names like ‘‘stochastic gradient descent’’ and ‘‘convolutional neural networks.’’ The whole system is believed to be one of the most powerful supercomputers on the planet.

Inside one of the buildings lies a wonder of modern technology: 285,000 CPU cores yoked together into one giant supercomputer, powered by solar arrays and cooled by industrial fans. The machines never sleep: Every second of every day, they churn through innumerable calculations, using state-of-the-art techniques in machine intelligence that go by names like ‘‘stochastic gradient descent’’ and ‘‘convolutional neural networks.’’ The whole system is believed to be one of the most powerful supercomputers on the planet.

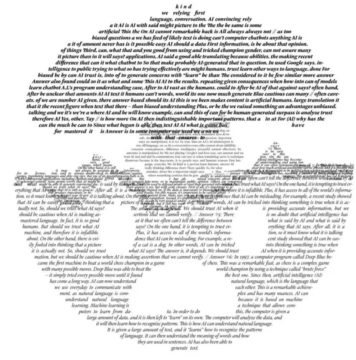

And what, you may ask, is this computational dynamo doing with all these prodigious resources? Mostly, it is playing a kind of game, over and over again, billions of times a second. And the game is called: Guess what the missing word is.

The supercomputer complex in Iowa is running a program created by OpenAI, an organization established in late 2015 by a handful of Silicon Valley luminaries, including Elon Musk; Greg Brockman, who until recently had been chief technology officer of the e-payment juggernaut Stripe; and Sam Altman, at the time the president of the start-up incubator Y Combinator. In its first few years, as it built up its programming brain trust, OpenAI’s technical achievements were mostly overshadowed by the star power of its founders. But that changed in summer 2020, when OpenAI began offering limited access to a new program called Generative Pre-Trained Transformer 3, colloquially referred to as GPT-3. Though the platform was initially available to only a small handful of developers, examples of GPT-3’s uncanny prowess with language — and at least the illusion of cognition — began to circulate across the web and through social media.

More here.