by Charlie Huenemann

Suppose you are Father God, or Mother Nature, or Mother God, or Father Nature — doesn’t matter — and you want to raise up a crop of mammals who can reason well about what’s true. At first you think, “No problem! I’ll just ex nihilo some up in a jiffy!” but then you remember that you have resolved to build everything through the painstaking process of evolution by natural selection, which requires small random shifts over time, with every step toward your target resulting in some sort of reproductive advantage for the mammal in question. Okay; this is going to be hard.

Given what you know about reasoning and truth, the mammal is going to have to have access to some way of abstractly representing the world to itself, or language. That in turn will require a community of language users; and that will require a community of beings who fare better through cooperation. This immediately raises the problem of how to evolve beings who are both selfish and social. Selfishness requires cheating whenever you can get away with it, but sociability requires trustworthiness. Striking a workable balance between selfishness and sociability is tricky, but not impossible, as anyone who has worked in corporate knows.

As if that tension isn’t hard enough: if what you want in the end are mammals who can reason well about what’s true, that raises even more problems. Reasoning well about what is true profiteth a mammal little. It does not reliably help a mammal in its selfish strivings, since it is often better for the mammal to believe all sorts of falsehoods that give it undue confidence (hope), or steer it clear of dangers (superstition), or hate its competitors (envy and jealousy). Nor does reasoning well about what is true help the mammal in terms of sociability, since a mammal is often more sociable by being stubbornly loyal to its clan (tribalism), or by taking on risks or burdens on behalf of the group (altruism), or caring for those who offer it no advantage (selfless love). Selfishness, sociability, and rationality: pick two, but you can’t have all three.

Given this predicament, we will have to give up on the original goal and settle for a next-best alternative, which is to create groups that reason well about what’s true, instead of individuals. This we can do. We first have to make sure our mammals have different opinions about what’s true. (Good news: this turns out to be easy to achieve.) Second, we have to have them talk to one another (already done). Third, we have to have them want to persuade one another to adopt the persuader’s opinion. This seems to accord pretty well with selfishness. But what counts as “acceptable means of persuasion” will have to be tempered somewhat to meet the requirements of sociability: if we allow for threats of force or bullying then we place our group’s cohesion at risk. So in the end we will need to establish social standards which tell us when we should or shouldn’t change our minds, on the basis of the claims our fellow mammals make. We can identify these standards as reason, near enough.

What we will get in the end are mammals who do not reason very well on their own — they fall into hope, superstition, envy, and other familiar mammalian foibles — but who reason exceptionally well in groups. Mammal A puts forward an opinion, only to be challenged by mammal B, with mammals C and D looking on, and a great many words are exchanged; in the end the group settles on an opinion which may be A’s, or someone else’s, or a new one that no one had considered before. But there begins to emerge a sense of what is right to conclude, independently of any individual’s opinion. We have at least a social process that functions in the way that “reason” is supposed to, even if we haven’t established that what counts as “right to conclude” is exactly the same as reasoning well about what is true.

To secure this last connection between what’s true and what’s right to conclude, we might simply stand back and let the school of hard knocks do its work. Groups and cultures and languages evolve no less than creatures do. Even if reasoning well about what is true does not necessarily benefit an individual, it will benefit the larger group, since what allows the group to reproduce itself will be its overall fitness in engaging with its environment. The group will need to know where to spend its winters, how much food to store, where to find it, which other groups to avoid, and so on. That will require the group’s social standards for when one should or shouldn’t change one’s mind to track what’s true, at least to some minimal degree. Or rather, what the group ends up believing will have to parallel what is true: the group may conclude the sun god blesses plants only when it ascends to a certain height in the sky, so long as that belief gets the group to plant crops at the right time.

This overall engineering of mammalian reason is the account Hugo Mercier and Dan Sperber offer in their 2017 book, The Enigma of Reason. The “enigma” they seek to explain is how humans are both fantastic and lousy reasoners. Humans, as we know, have calculus and the standard model and economic strategies and chess; but they also buy lottery tickets and adopt conspiracy theories and fail at all sorts of tasks set by crafty psychologists. Mercier and Sperber argue that we need to think of reasoning as a group project rather than as an oracle housed in each brain. What each brain can do is push for its own opinion and spew out reasons for believing its own opinion is true (that’s confirmation bias, familiar to us all). What other brains can provide are reasons for thinking our theories are not true; and as our brains hash things out with one another, we are often compelled to change our minds, and typically for the better.

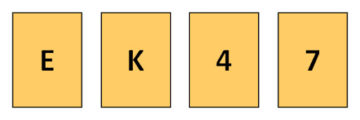

The Wason selection test provides a vivid example of the difference between individual reasoning and reasoning in groups. Suppose we have four cards with letters on one side and numbers on the other. People are famously bad at getting the right answer to the question, “Which card(s) do you need to turn over in order to determine whether any card with an E on one side has a 7 on the other?”

Less than 20% of individuals select the right answer. But if you instead pose the problem to groups of people, more than half of the groups land on the right answer. They argue about it, and people come to appreciate the reasons that are offered and change their minds.
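For readers who want to see why the task trips people up, here is a minimal sketch of its logic in Python. The four visible faces (E, K, 4, 7) are an assumption made for illustration and may not match the cards pictured; the helper name `could_break_rule` is likewise hypothetical. The idea is simply that a card needs turning only if its hidden side could falsify the rule "any card with an E on one side has a 7 on the other."

```python
# Hypothetical visible faces (an assumption; the cards pictured may differ).
VISIBLE_FACES = ["E", "K", "4", "7"]


def could_break_rule(visible: str) -> bool:
    """Return True if this card's hidden side could falsify the rule
    'any card with an E on one side has a 7 on the other'.

    Assumes, as the essay does, that every card has a letter on one
    side and a number on the other.
    """
    if visible.isalpha():
        # A letter card threatens the rule only when it shows E:
        # its hidden number might turn out not to be 7.
        return visible == "E"
    # A number card threatens the rule only when it is not 7:
    # its hidden letter might turn out to be E.
    return visible != "7"


cards_to_turn = [face for face in VISIBLE_FACES if could_break_rule(face)]
print(cards_to_turn)  # prints ['E', '4']
```

Under these assumed faces, the 7 card is the tempting mistake. Whatever letter is on its back, the rule survives, so turning it over tells you nothing; the card that matters is the number that is not 7.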

Two interesting consequences follow from this view of reasoning as a social project. The first is that the individualistic ideal of human beings as free and independent judges of what is reasonable and true does not accord well with what we know ourselves to be. We need each other in order to reason well; the “rational, self-interested individual” is a loser. The second is that even if we have very good reasons to despair over the rationality of individual human beings, we should not conclude that arguing with them is a waste of time. For their sake, as well as ours, we need to engage with their reasons, and hash things out. It’s the best strategy available to us.