by Paul Orlando

I have a friend who is a self-described “cop magnet.” He’s been arrested six or seven times, just standing there.

I understand why. He looks like trouble. A propensity for wearing all black clothing. A shaved head and a full beard. Multiple tattoos; never smiles. He’s a gentle and intellectual person, but you’d never guess from looking at him.

It’s an undesirable problem to have, looking guilty. There was even a case of a man in Taiwan who, similar to my friend, looked so much like a potential (not actual) Triad member (with the expected police reaction) that he had plastic surgery to make himself look nicer. My friend deals with law enforcement run-ins by avoiding signs of aggression and keeping his eyes down. That said, his techniques obviously don’t work perfectly against a police officer’s presumptions.

As we move to an artificial intelligence-fueled world, including in law enforcement, what will change? Is AI in law enforcement just presumption that you can blame on something else?

This may be especially relevant as more attention is paid to differences in policing people of different races (and later, I assume, income, education, and other characteristics). I wonder how technology will be applied to solve questions of fairness. Or how target metrics and collected statistics will be used to discover, hide, or create problems.

Interestingly, using more tech can enable presumptive guilt. Someone who looks like other people who have caused problems is deemed more likely to cause problems, and is then presumptively dealt with. Will additional attention now paid to racial profiling make a long-term difference or will it just shift the process from people to machines?

The other side of this is statistical guilt. Statistical guilt is what we might see coming out of recent protests that included looting. There are thousands of videos and images of people participating. Most of the participants were peaceful, but a small number were destructive and violent. Many videos include clear views of people’s faces while they steal items from stores. They aren’t wearing name badges, but given facial recognition and enough analysis, could you identify someone with enough accuracy to charge them with a crime? As in, this looks 93% like you, so you’re (statistically 93%) guilty?

Tereza’s Inevitable Grandchild

In Milan Kundera’s book The Unbearable Lightness of Being, one of the characters, Tereza, is a photographer. Her photographs of protests during the Prague Spring at first help tell the story to an international audience, but then allow security forces to identify participants. That was back when such a task would be done entirely by humans.

The task can now be automated. The tech has become cheap enough and available enough to build your own kit. There have even been advances in facial recognition that can identify individuals wearing face masks. And ironically, it is video of acts like George Floyd’s death that may lead to a call for more surveillance.

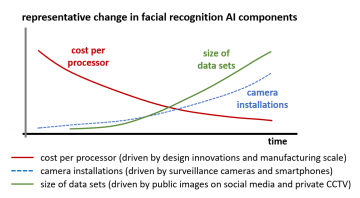

Greater computing power, a large base of networked cameras (many of which are phones), large image datasets, and the need to innovate on personal identification made facial recognition technology inevitable. But it’s the next steps of matching personal identity with past actions and then passing that information to an authoritative group that makes the difference. Otherwise you just have a different way to advertise to potential customers.

I became interested in the inevitability of certain outcomes, especially ones enabled by technological innovation where there is a predictable nature to the speed of change. This is where new business models emerge to support activity that formerly would have been impossible at scale.

Once rare, static images and videos of people are now plentiful, both publicly posted and privately accessible from CCTV feeds. And recently in the US, there are many videos of people behaving badly, including those who filmed and shared the content themselves, providing a view that drones, fixed position cameras, and other surveillance tech do not yet have. Even with many participants wearing masks, enough did not wear masks. Or, as described above, their partial masks may not prevent identification anyway.

So what happens with these pictures and videos of people looting? The FBI has put out a call “Seeking Information on Individuals Inciting Violence During First Amendment-Protected Peaceful Demonstrations” so I figure that any publicly available images are already being collected and will be analyzed.

Tereza’s inevitable grandchild incriminates the next generation and at scale. As in Kundera’s book, this is an unintended consequence of protests today. And as with the Prague Spring, the amount of worry depends on where in the world you are.

Partial Pause

There are many companies that produce AI image surveillance and analysis hardware and software today, including Huawei, Hikvision, NEC, Amazon, Cisco, IBM, Microsoft, Palantir, and others.

There is also a group of US companies that have paused their involvement in facial recognition development and product availability, including Amazon, Microsoft, and IBM. But I wonder how long this pause lasts or how much it matters. Will customers of this tech just go to other suppliers? For example, over 600 law enforcement agencies have been using Clearview — also a US company, but a startup — which provides facial recognition software using a data set scraped from social media.

The topic raises many questions that the large companies above would rather avoid. A startup like Clearview, however, can thrive on these questions. And while the technique that Clearview used to gather its data set violates the social networks’ terms of service, those images were just there for the taking.

Also, while large US companies may pause their facial recognition technology investments, those from other countries may not. Even if one nation prohibits this tech because of unintended consequences, other states certainly support it.

Laws and perspectives change. What is unacceptable today may become acceptable tomorrow. With large image records, we may see just that.

But the technology to identify large numbers of faces must eventually exist, however people use it.

A Recent Example… That Is Not an Example

Combine facial recognition AI with a bad human process and you get a well-publicized recent false arrest. Robert Julian-Borchak Williams was arrested earlier in 2020 on suspicion of a watch theft. The timeline is interesting. The theft occurred in October 2018, facial recognition identified Williams as the suspect in March 2019, police started to investigate in July 2019, and they didn’t act until January 2020.

In Williams’ case, he was also picked out of a lineup by the store’s security guard. It’s just that the security guard had not witnessed the crime. Even if used properly, a lineup of individuals who each look like a targeted criminal would perhaps produce even less reliable eyewitness selections.

What made Williams’ case a national story was not his false arrest, but rather the use of AI to identify him from a security video. The haphazard human process was mostly not reported. If Williams had been misidentified purely by eyewitnesses with the same faulty policing process, we never would have heard about it.

I worry that a simple call to defund the police does not improve that situation. With less of a budget, do organizations tend to rely more or less on automation? Especially as it is the technology that gets cheaper over time, not the people.

The needed installed base of cameras is already there pretty broadly, even without smartphones. The top 20 cities for CCTV cameras per person include London, Atlanta, Singapore, Chicago, Sydney, Berlin, and New Delhi. Nine others are cities in China (perhaps the best innovator but worst user of the tech today), two are in the UAE, and the remaining two are Baghdad and Moscow. (If anything, the list probably overrepresents less densely populated cities, which need more cameras per person to do the same job.)

After the initial distaste wears off, and perhaps initiated by a future crisis, one could imagine a counter-proposal in favor of more facial recognition AI which depends on even greater data sharing. Just mandate the sharing of additional data across jurisdictions and perhaps the problems disappear in theory (while other problems may be created in practice).

One’s privacy may be disrupted by an individual, but it has historically been impossible to do this at scale across many contexts. We are uncomfortable with this eventuality because it is so at odds with the experience of previous generations as well as many around the world today. We are uncomfortable because examples of misuse are so numerous. The city (formerly the place for anonymity) now temporarily cedes that status to the countryside (the place where people knew everyone else).

If a process is inevitable, the speed of its growth is the only thing to fight about.