by Daniel Ranard

You must have seen the iconic image of the blue-white earth, perfectly round against the black of space. How did NASA produce the famous “Blue Marble” image? Actually, Harrison Schmitt just snapped a photo on his 70mm Hasselblad. Or maybe it was his buddy Eugene Cernan, or Ron Evans – their accounts differ – but the magic behind the shot was only location, location, location: they were aboard Apollo 17 in 1972.

Since then, NASA has produced images increasingly strange and absorbing. Take a look at the “Pillars of Creation” if you never have. Click it; the inline image won’t do. That’s from the Hubble Space Telescope, not a handheld Hasselblad.

Meanwhile, this week an international collaboration gave us the world’s first photograph of a black hole. The image appears diminutive, nearly abstract. It’s no blue marble; it offers no sense of scale. Though we may obtain better images soon, the first image has its own allure. The photograph is appropriately intangible, in need of context, not quite structureless.

Maybe “photograph” is a stretch. For one, the choice of orange is purely aesthetic. The actual light captured on earth was at radio wavelengths (radio waves are a type of light), much longer than the eye can see. The orange-yellow variations depicted in the image do not correspond to color at all: scientists simply colored the brighter emissions yellow, the dimmer ones orange. Besides, even if we could see light at radio wavelengths, no one person could see the black hole as pictured. Telescopes thousands of kilometers apart collected the light, and sophisticated algorithms reconstructed the final image by interpolating between sparse data points.
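The point about false color can be made concrete. Here is a minimal Python sketch of a two-color map: the data carry only brightness, and whoever draws the picture picks the hues. The endpoint colors and the names `false_color` and `colorize` are illustrative assumptions, not the palette or software used for the published image.

```python
# Illustrative endpoint colors (assumed, not the published palette).
DIM_ORANGE = (200, 80, 0)
BRIGHT_YELLOW = (255, 230, 120)


def false_color(brightness: float) -> tuple:
    """Blend linearly from DIM_ORANGE (brightness 0) to BRIGHT_YELLOW (brightness 1)."""
    t = min(max(brightness, 0.0), 1.0)  # clamp into [0, 1]
    return tuple(round(a + t * (b - a)) for a, b in zip(DIM_ORANGE, BRIGHT_YELLOW))


def colorize(image):
    """Rescale a 2D list of radio intensities to [0, 1], then color each pixel."""
    lo = min(min(row) for row in image)
    hi = max(max(row) for row in image)
    span = (hi - lo) or 1.0  # avoid dividing by zero on a flat image
    return [[false_color((v - lo) / span) for v in row] for row in image]


# A hypothetical 2x2 patch of intensities: dimmest pixel orange, brightest yellow.
pixels = colorize([[0.0, 10.0], [5.0, 10.0]])
```

Swap in any other pair of colors and the underlying measurement is unchanged; only its presentation differs.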

Should we marvel at this image as a photograph? Or is it a more abstract visualization of scientific data? First let’s ask what it means to see a thing in the ordinary sense.

Maybe seeing seems easy. You open your eyes, and the world washes in, somehow imprinting itself. But it’s harder than it seems. Put aside the miracle of cognition and understanding. First, a smaller miracle occurs. From all the light scattered through our environment, a portion is collected and arranged upon our retina, a reproduction of the world in miniature.

If you’re an astronomer, or an ophthalmologist, or anyone who has developed film, this miracle is familiar but worth appreciating. Say you want to capture the first ordinary photograph. How do you transmute the world onto print? Imagine you have a piece of special white paper, covered with special white dots. When you snap your fingers, each dot changes color to match the color of the light that strikes it, and the color freezes.

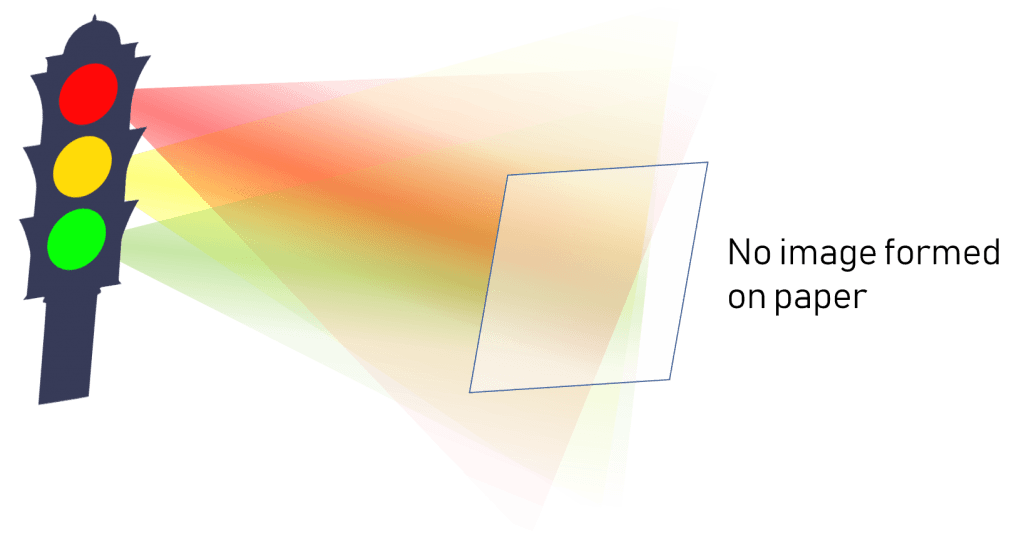

Can you take a picture yet? You hold the paper out on a bright day and snap your fingers. What happens? Actually, not much. Say you stand in front of a traffic signal. The red, yellow, or green of the traffic light shines onto the paper. But the sun shines too, and so does the light scattered from the pavement and the blue scattered from the sky. It all washes onto the paper. When you snap your fingers, the paper freezes white. Nothing distinguishes traffic signal from pavement from sky.

If the traffic light were green, and you held the paper close enough, your snap might freeze it a pale green. Still, no recognizable image of the traffic signal appears. The paper freezes just as you see it: white, homogeneous, multiply illuminated. We are already familiar with the fact that an ordinary piece of paper does not have an image of the world formed upon it. Light from the sun scatters and flies – it does not arrange itself on paper to mimic the spatial arrangement of the scene in front.
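A toy model makes the failure explicit. In this Python sketch, each source in the scene is reduced to one RGB color, and, as a simplifying assumption, every source lights every dot equally. The scene and the name `bare_paper` are hypothetical.

```python
# Hypothetical scene: each light source reduced to a single (R, G, B) color.
SCENE = {
    "sky": (135, 206, 235),
    "pavement": (128, 128, 128),
    "green signal": (0, 255, 0),
}


def bare_paper(sources, n_dots):
    """Return the color each of n_dots records with no pinhole or lens.

    Assumption: every source shines equally on every dot, so each dot
    records the same average of all the source colors.
    """
    mix = tuple(sum(color[i] for color in sources) / len(sources) for i in range(3))
    return [mix for _ in range(n_dots)]


dots = bare_paper(list(SCENE.values()), n_dots=8)
```

Every dot records the same mixture, so the paper keeps no trace of where each color came from.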

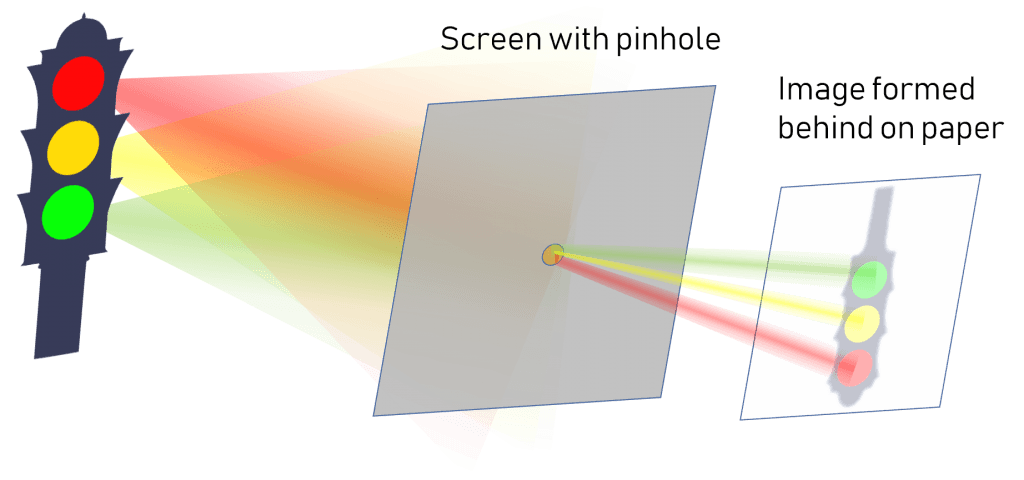

How do we capture images then, given this special paper? Of course, the paper is like camera film (or like the arrays of sensors in our phone cameras, or the photoreceptive cells in our retinas). But we have seen that even with a recording device like our special paper, the challenge remains of forming an image to record. The oldest, simplest method of forming images is to use a pinhole: you place an opaque screen in front of the paper, obscuring all light from the scene except the light passing through a small “pinhole” cut in the screen. The pinhole solves the dilemma of the paper and the traffic signal. Rather than mixing to illuminate the entire paper, each of the red, yellow, and green signals illuminates a separate region of the paper, casting an inverted image. The effect works identically whether the traffic signal produces its own light or merely scatters light from another source, like the sun.
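The geometry of the pinhole fits in a few lines. This is a minimal Python sketch, assuming an ideal, vanishingly small hole at the origin and paper a distance `f` behind it: each point of the scene sends exactly one ray through the hole, and by similar triangles that ray lands at a position scaled by `f / z` and flipped in sign. The traffic-signal coordinates and the names `Point` and `project` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Point:
    x: float  # horizontal position (m)
    y: float  # height (m)
    z: float  # distance in front of the pinhole (m); must be positive


def project(p: Point, f: float) -> tuple:
    """Where the single ray from p through an ideal pinhole hits paper f behind it.

    Similar triangles give (-f*x/z, -f*y/z): the scene is scaled down and inverted.
    """
    if p.z <= 0:
        raise ValueError("point must be in front of the pinhole")
    return (-f * p.x / p.z, -f * p.y / p.z)


# Hypothetical traffic signal 20 m away, lamps 0.3 m apart; paper 0.1 m behind the hole.
signal = {
    "red": Point(0.0, 2.6, 20.0),
    "yellow": Point(0.0, 2.3, 20.0),
    "green": Point(0.0, 2.0, 20.0),
}
spots = {name: project(lamp, f=0.1) for name, lamp in signal.items()}
```

The red lamp, highest in the scene, lands lowest on the paper, and each lamp gets its own spot instead of mixing with the others.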

The pinhole method works, and it’s probably how the eyes of our nonhuman ancestors formed the first creature-made images: light entered a pinhole opening in the eye and formed an image on light-sensitive tissue. But the pinhole method has at least one major downside: the pinhole blocks most of the light, so it’s especially hard to form images in near darkness (let alone faint images of distant galaxies). When light is scarce, you want to use all the light you can gather. You might try a larger pinhole, but the image blurs and disappears as you enlarge the hole. One solution is to use a larger hole filled with a transparent substance that bends and redirects the light precisely enough to form an image nonetheless. That’s how camera lenses work (when you fill the hole with curved glass) and how our eyes work (when you fill the hole with transparent proteins). Pit vipers, for various reasons, have two lens-based eyes as well as two pinhole-like pit organs, which sense infrared light. Meanwhile, most large telescopes use mirrors rather than lenses.
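The trade-off between light and sharpness can be put in numbers. Here is a rough Python sketch in geometric optics that ignores diffraction, which also blurs images through very small holes: the light admitted grows with the hole’s area, while a single point of the scene spreads into a spot whose width grows with the hole’s diameter. The name `pinhole_tradeoff` and the distances are illustrative assumptions.

```python
import math


def pinhole_tradeoff(d_mm, f_mm, z_mm):
    """Geometric-optics sketch (diffraction ignored).

    A hole of diameter d_mm, paper f_mm behind it, a point source z_mm in front.
    Returns (area, blur): the hole's area, which sets how much light gets through,
    and the width of the spot the point makes on the paper, d * (z + f) / z
    by similar triangles.
    """
    area = math.pi * (d_mm / 2) ** 2
    blur = d_mm * (z_mm + f_mm) / z_mm
    return area, blur


for d in (0.5, 1.0, 2.0, 4.0):
    area, blur = pinhole_tradeoff(d, f_mm=100, z_mm=10_000)
    print(f"hole {d:.1f} mm: area {area:.2f} mm^2, blur spot {blur:.2f} mm")
```

Doubling the diameter quadruples the light but also doubles the blur. A lens escapes this bind by bending the rays from each in-focus point back to a single spot, so a wide opening no longer means a wide smear.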

There are lots of ways to form an image, but nature rarely forms them for us. Instead, we redirect light rays, bend and capture them, arrange them into a facsimile. The first black hole photograph from the Event Horizon Telescope is no exception. The “telescope” in question is really a network of telescopes, at sites across the earth. They collect and record light from their separate locations, continually changing as the earth spins.
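The earth’s spin helps, and that can be sketched too. Seen from the source, the separation between two telescopes (the baseline) turns as the earth rotates, tracing a curve in what radio astronomers call the (u, v) plane; each point on the curve is one measurement the pair can make. This Python sketch uses the standard textbook relations for projecting a baseline given the source’s declination and hour angle. The baseline and the name `uv_track` are hypothetical; the declination of about +12.4 degrees and the 1.3 mm wavelength are approximate values for M87 and the Event Horizon Telescope.

```python
import math


def uv_track(baseline_m, dec_deg, wavelength_m, hour_angles_deg):
    """Sky-plane coordinates (u, v), in wavelengths, of one baseline over time.

    baseline_m: (X, Y, Z) separation of two telescopes in metres, in an
    equatorial frame with Z toward the celestial pole.
    dec_deg: declination of the source.
    hour_angles_deg: the source's hour angle at each moment; it advances
    about 15 degrees per hour as the earth spins.
    """
    X, Y, Z = (b / wavelength_m for b in baseline_m)
    dec = math.radians(dec_deg)
    track = []
    for h_deg in hour_angles_deg:
        H = math.radians(h_deg)
        u = math.sin(H) * X + math.cos(H) * Y
        v = (-math.sin(dec) * math.cos(H) * X
             + math.sin(dec) * math.sin(H) * Y
             + math.cos(dec) * Z)
        track.append((u, v))
    return track


# Hypothetical 5000 km baseline lying in the equatorial plane, observed for 12 hours.
track = uv_track((4000e3, 3000e3, 0.0), dec_deg=12.4, wavelength_m=1.3e-3,
                 hour_angles_deg=range(-90, 91, 15))
```

For a baseline with no component along the earth’s axis, the track is an ellipse. More telescopes add more tracks, and more hours lengthen each one, gradually filling in the plane.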

An algorithm combines these fragments of information to form the final image. You can watch Katie Bouman give a lucid explanation of the algorithm she and her team developed. In the end, maybe the image should be understood as more of a map than a photograph. In particular, it’s a painstakingly reconstructed map of where the strongest radio emissions originate in the region around the supermassive black hole at the center of the galaxy M87. The map is complicated by the fact that light emitted near the black hole is also bent by the black hole’s gravity. And the orange and yellow, remember, are just for fun. Still, it’s not so different from the images we construct every day. We go on capturing light, rearranging it into representations we find comprehensible.
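Why the reconstruction is hard can be seen in one dimension. To a good approximation, each pair of telescopes measures one Fourier component of the sky’s brightness, so the network samples only some of the frequencies an image needs. The Python sketch below is not the algorithm Bouman and her team developed; it is the naive starting point, which treats every unmeasured frequency as zero (in radio astronomy, a “dirty image”). The sky profile, the set of measured frequencies, and the function names are hypothetical.

```python
import cmath


def dft(signal):
    """Discrete Fourier transform, written out directly (O(n^2), fine for a sketch)."""
    n = len(signal)
    return [sum(signal[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]


def dirty_image(measured, n):
    """Inverse DFT using only the measured frequencies; unmeasured ones count as zero."""
    return [sum(measured.get(k, 0) * cmath.exp(2j * cmath.pi * k * t / n)
                for k in range(n)).real / n
            for t in range(n)]


# Hypothetical 1D slice through a ring: two bright points with a dark gap between.
n = 16
sky = [0.0] * n
sky[5] = sky[10] = 1.0
spectrum = dft(sky)

full = dirty_image(dict(enumerate(spectrum)), n)  # every frequency measured
kept = (0, 1, 2, 3, n - 3, n - 2, n - 1)          # only a few, in +/- pairs
sparse = dirty_image({k: spectrum[k] for k in kept}, n)
```

With every frequency, the inverse transform returns the sky exactly; with only a handful, it returns a smeared version with the same total brightness. Many different skies agree with the same sparse measurements, and practical imaging methods choose among them by adding assumptions about what plausible images look like.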