Azeem Azhar at Exponential View:

In the corridors of Silicon Valley’s most secretive AI labs, a quiet revolution is unfolding. While headlines scream of stalled progress, insiders know something the market hasn’t caught up to yet: the $1 trillion bet on AI isn’t failing. It’s transforming.

The real story? AI’s most powerful players are quietly moving beyond the purely brute-force scaling that defined the last decade. Alongside it, they’re pursuing a breakthrough approach that could keep delivering progress.

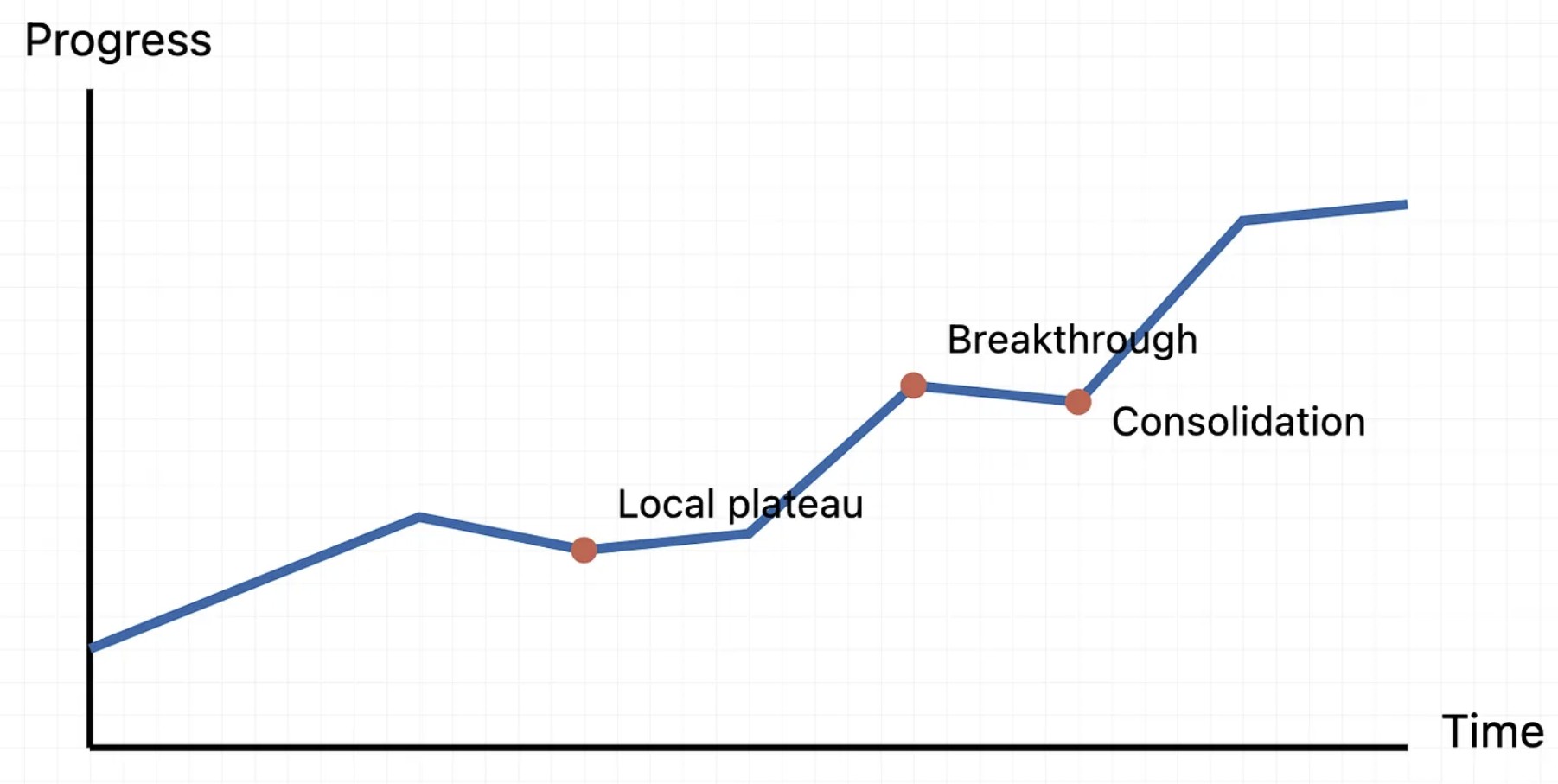

The strategy of scaling up, training ever-larger models on ever more data, is starting to yield diminishing returns. Here’s The Information:

Some researchers at [OpenAI] believe Orion, [the latest model], isn’t reliably better than its predecessor in handling certain tasks, according to the employees. Orion performs better at language tasks but may not outperform previous models at tasks such as coding.

Google’s upcoming iteration of its Gemini software is reportedly falling short of internal expectations. Even Ilya Sutskever, OpenAI’s former chief scientist, has remarked that scaling has plateaued:

The 2010s were the age of scaling; now we’re back in the age of wonder and discovery once again.

If this is true, does it mean the trillion-dollar bet on ever-bigger AI systems is coming off the rails?

More here.

Enjoying the content on 3QD? Help keep us going by donating now.