Steve Taylor in Psychology Today:

You might feel that you have the ability to make choices, decisions and plans – and the freedom to change your mind at any point if you so desire – but many psychologists and scientists would tell you that this is an illusion. The denial of free will is one of the major principles of the materialist worldview that dominates secular western culture. Materialism is the view that only the physical stuff of the world – atoms and molecules and the objects and beings that they constitute – is real. On this view, consciousness and mental phenomena can be explained in terms of neurological processes.

Materialism developed as a philosophy in the second half of the nineteenth century, as the influence of religion waned. And right from the start, materialists realised that the denial of free will was inherent in their philosophy. As one of the most fervent early materialists, T.H. Huxley, stated in 1874, “Volitions do not enter into the chain of causation…The feeling that we call volition is not the cause of a voluntary act, but the symbol of that state of the brain which is the immediate cause.” Here Huxley anticipated the ideas of some modern materialists – such as the psychologist Daniel Wegner – who claim that free will is literally a “trick of the mind.” According to Wegner, “The experience of willing an act arises from interpreting one’s thought as the cause of the act.” In other words, our sense of making choices or decisions is just an awareness of what the brain has already decided for us.

More here.

Billions of years ago, life crossed a threshold. Single cells started to band together, and a world of formless, unicellular life was on course to evolve into the riot of shapes and functions of multicellular life today, from ants to pear trees to people. It’s a transition as momentous as any in the history of life, and until recently we had no idea how it happened.

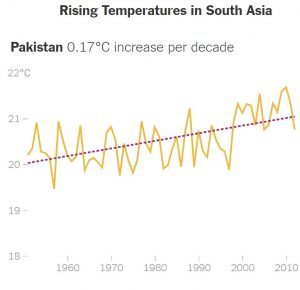

Climate change could sharply diminish living conditions for up to 800 million people in South Asia, a region that is already home to some of the world’s poorest and hungriest people, if nothing is done to reduce global greenhouse gas emissions.

What did I do to deserve a yelling at from the famously curmudgeonly and irascible Harlan Ellison? Well, from 2010 to 2013, I was the president of SFWA, the Science Fiction and Fantasy Writers of America, an organization to which Harlan belonged and which made him one of its Grand Masters in 2006. Harlan believed that, because he was a Grand Master, I was obliged to take his call whenever he felt like calling, which was usually late in the evening, as I was on Eastern time and he was on Pacific time. So sometime between 11 p.m. and 1 a.m., the phone would ring, and “It’s Harlan” would rumble across the wires, and then for the next 30 or so minutes, Harlan Ellison would expound on whatever it was he had a wart on his fanny about, which was sometimes about SFWA-related business, and sometimes just life in general.

Moon Brow is a story of lust, love, and loss set during three periods of time: Iran’s revolution, the post-revolution and Eight Years War with Iraq, and the post-war era. An Eight Years War veteran, Amir Yamini, who formerly drowned himself in sex and alcohol, is discovered in a hospital for shell-shock victims by his mother and his sister Reyhaneh, having languished there for five years. Suffering from mental injuries caused by the war, Amir is haunted by a woman in his dreams that he calls “Moon Brow” because he can’t see her face. Amir’s attempt to seek the truth of his past brings him to his old friend, Kaveh, who might know what happened in Amir’s past life. The search for a woman he truly loved before going to war takes him to where he lost his left arm—and his wedding ring—during the war. Amir’s relationship with his sister Reyhaneh is one of the best parts of the novel—a true companion, Reyhaneh helps Amir discover the truth of his life before the war. Moon Brow combines Amir’s journey into his past life with the history of Iran, and it also conveys the trauma of war as it reveals the victims of Saddam Hussein’s genocidal ideology.

I’m asking myself about double standards a lot lately, in public life and also in science. I’m particularly concerned about double standards in science whereby women’s issues are viewed differently than men’s. We’ve lagged behind in important ways because there is a concern that if we have a biological explanation for women’s behavior, it will smash women up against the glass ceiling, whereas a biological explanation for men’s behavior doesn’t do such a thing. So, we’ve been freer in biomedical science to explore questions about the biological foundations of men’s behavior and less free to explore those questions about women’s behavior. That’s a problem that manifests itself in the lag between what we understand about men and what we understand about women.

In the 1970s, Shulamith Firestone

In his 1946 classic essay ‘Politics and the English Language’, George Orwell argued that “if thought corrupts language, language can also corrupt thought”. Can the same be said for science — that the misuse and misapplication of language could corrupt research? Two neuroscientists believe that it can. In an intriguing paper published in the Journal of Neurogenetics, the duo claims that muddled phrasing in biology leads to muddled thought and, worse, flawed conclusions.

THIRTY YEARS AGO, while the Midwest withered in massive drought and East Coast temperatures exceeded 100 degrees Fahrenheit, I testified to the Senate as a senior NASA scientist about climate change. I said that ongoing global warming was outside the range of natural variability and it could be attributed, with high confidence, to human activity — mainly from the spewing of carbon dioxide and other heat-trapping gases into the atmosphere. “It’s time to stop waffling so much and say that the evidence is pretty strong that the greenhouse effect is here,” I said.

By several measures, including rates of poverty and violence, progress is an international reality. Why, then, do so many of us believe otherwise?

If the names of these Black Oberlinites are unfamiliar, I suspect it is with good reason: we do not know how to talk about them. Over the course of my life I have learned that to be black and a classical musician is to be considered a contradiction. After hearing that I was a music major, a TSA agent asked me if I was studying jazz. One summer in Bayreuth, a white German businessman asked me what I was doing in his town. Upon hearing that I was researching the history of Wagner’s opera house, he remarked, “But you look like you’re from Africa.” After I gushed about Mahler’s Fifth Symphony, someone once told me that I wasn’t “really black.” All too often, black artistic activities can only be recognized in “black” arts.

Last week, the philosopher Stanley Cavell died. His contributions to human thought are vast and rich; his subjects range from the intricacies of human language to the nature of skill. But one of Cavell’s best-known books is also, at least at first glance, his most frivolous: Pursuits of Happiness. In this book, originally published in 1981, Cavell claims that what he calls “comedies of remarriage”—Hollywood comedies from the 1930s and 40s that share a set of genre conventions (borderline farcical cons, absent mothers, weaponized erotic dialogue), actors (Cary Grant, Clark Gable, Katharine Hepburn, Barbara Stanwyck), and settings (Connecticut)—are the inheritors of the tradition of Shakespearean comedy and romance, and that they constitute a philosophically significant body of work.

Visitors to Pyongyang, North Korea’s capital, often report feeling as though they have landed in a Truman-Show-type setup, unable to tell whether what they see is real or put there for their benefit, to be cleared away like props on a stage once they have moved on. The recent transformation of Kim Jong Un, the country’s dictator, from recluse to smooth-talking statesman has heightened interest in the country but has not really dispelled the fundamental bewilderment of trying to make sense of it. Oliver Wainwright, the Guardian’s architecture critic (and the brother of The Economist’s Britain editor), who has compiled his photographs from a week-long visit to Pyongyang in 2015 into a glossy coffee-table book published by Taschen, starts from this initial sense of strangeness. He describes wandering around Pyongyang as moving through a series of stage sets from North Korean socialist-realist operas, where every view is carefully arranged to show off yet another monument or apartment building. But his eye is also alive to what the city, which was originally planned by a Soviet-trained architect, has in common with other places that were influenced by Soviet aesthetics.

You can halt aging without punishing diets or costly drugs. You just have to wait until you’re 105. The odds of dying stop rising in people who are very old, according to a new study that also suggests we haven’t yet hit the limit of human longevity. The work shows “a very plausible pattern with very good data,” says demographer Joop de Beer of the Netherlands Interdisciplinary Demographic Institute in The Hague, who wasn’t connected to the research. But biodemographer Leonid Gavrilov of the University of Chicago in Illinois says he has doubts about the quality of the data. As we get older, our risk of dying soars. At age 50, for example, your risk of kicking the bucket within the next year is more than three times higher than when you’re 30. As we head into our 60s and 70s, our chances of dying double about every 8 years. And if you’re lucky enough to hit 100 years, your odds of making it to your next birthday are only about 60%. But there may be a respite, according to research on lab animals such as fruit flies and nematodes. Many of these organisms show so-called mortality plateaus, in which their chances of death no longer go up after a certain age. It’s been hard to show the same thing in humans, in part because of the difficulty of obtaining accurate data on the oldest people. So, in the new study, demographer Elisabetta Barbi of the Sapienza University of Rome and colleagues turned to a database compiled by the Italian National Institute of Statistics. It includes every person in the country who was at least 105 years old between the years 2009 and 2015—a total of 3836 people. Because Italian municipalities keep careful records on their residents, researchers at the institute could verify the individuals’ ages. “These are the cleanest data yet,” says study co-author Kenneth Wachter, a demographer and statistician at the University of California, Berkeley.